A/B Testing Landing Pages to Beat Bloated Agencies

- Chase McGowan

- Oct 10, 2025

- 13 min read

A/B testing isn't just about creating two versions of a page to see which one "wins." That’s the textbook definition. In reality, successful testing is driven entirely by the strategy behind the test—a strategy that oversized, generalist agencies often get wrong.

Why Agency A/B Tests Often Fail to Deliver

Does this sound familiar? You hired a big-name agency for your Google Ads, expecting game-changing results, but your landing page performance is completely flat. It's a story I hear constantly from clients who are tired of paying high retainers for zero progress.

The problem usually boils down to their one-size-fits-all approach. It's a system built for their own internal efficiency and scale, not for your unique business growth.

Too often, these bloated agencies stick your account with a junior-level manager armed with a generic "best practices" checklist. They're incentivized to focus on easy, low-impact changes that look good on a monthly report but do absolutely nothing for your bottom line. They're account managers, not strategic specialists.

Surface-Level Changes vs. Strategic Wins

I once took over an account where the previous agency was celebrating a test that changed a button from blue to green. Their big result? A meaningless 1% lift in clicks. They were testing just for the sake of testing, trapped in slow, bureaucratic processes that produced insignificant outcomes while billing the client for the hours.

This is a classic agency pitfall—they get obsessed with vanity metrics instead of real ROI because it's easier to report on "activity" than on actual profit.

In contrast, as a dedicated consultant, my first step was to dig into the why. Why was the page underperforming in the first place? It was obvious the core value proposition was muddy and didn't match what the ad creative was promising.

My first test had nothing to do with colors. I rewrote the headline and sub-headline to directly hammer on the customer’s primary pain point.

The result was a 19% increase in qualified leads—not just clicks. That’s the critical difference between an agency checking a box and an expert executing specialized, strategy-led optimization. It’s not guesswork; it’s about deep, hands-on expertise.

The Value of a Specialized Approach

This scenario isn't a one-off. Simply aligning your landing page’s messaging with its ad creative can deliver a 15% higher conversion rate and a 25% bump in return on ad spend. You can find more data-driven optimization insights on VWO's blog. This is exactly the kind of strategic thinking that oversized agencies consistently miss.

This is where an expert consultant has a massive advantage. I’m focused on impactful, strategic changes from day one. You aren't paying for layers of management, fancy offices, or a team that recycles the same template for every client. You’re investing in a single, specialized point of contact whose success is tied directly to yours.

A specialized approach helps you sidestep the common agency traps:

Slow Approval Chains: Bloated agencies have multi-step approval processes that completely kill momentum. My process is lean and fast.

Generic Hypotheses: They test button colors because a blog post said so, not because your data points to a real problem.

Lack of Business Context: A junior account manager will never understand the nuances of your business the way a dedicated consultant does. I take the time to learn your business inside and out.

Ultimately, effective A/B testing of landing pages requires so much more than software and a checklist. It demands a true strategic partner who can diagnose the real issues and run tests that actually drive meaningful business growth. You can learn more about this philosophy and why an expert consultant outperforms most lead generation marketing agencies.

How to Form a Hypothesis That Drives Real Growth

Any A/B test worth running is won long before you hit "launch." The victory is found in the hypothesis—a sharp, data-backed prediction. This is precisely where bloated agencies cut corners. They’ll test something easy, like a button color, because it looks like work, not because it’s strategically sound.

My approach is different. As an individual specialist, I start with a deep dive into your business goals, and more critically, your actual user behavior. Growth doesn't come from random guesses. It comes from data. We have to become detectives, hunting for the real friction points that stop visitors from converting.

Digging for Data-Driven Clues

Before we even dream up a variation, we need to find the problem. This means going way beyond surface-level metrics and getting into the weeds of how people really use your landing pages.

So, where are the hidden opportunities?

Google Analytics: Look for the anomalies. Is one page bleeding visitors with a crazy-high bounce rate? Are mobile users leaving in droves while desktop users stick around? This data is your treasure map, pointing directly to the problem areas.

Heatmaps and Session Recordings: Tools like Hotjar are my secret weapon here. They show you exactly where people click (or try to click), how far they scroll, and which parts of your page they completely ignore. There's nothing more telling than watching a session recording of a user rage-clicking an element that isn't even a link.

Customer Feedback: Sometimes, the fastest way to find the problem is just to ask. Dive into surveys, read through support tickets, and listen to sales call transcripts. These sources are goldmines for customer objections and confusion that raw data can never fully explain. An agency won't do this; an expert partner will.

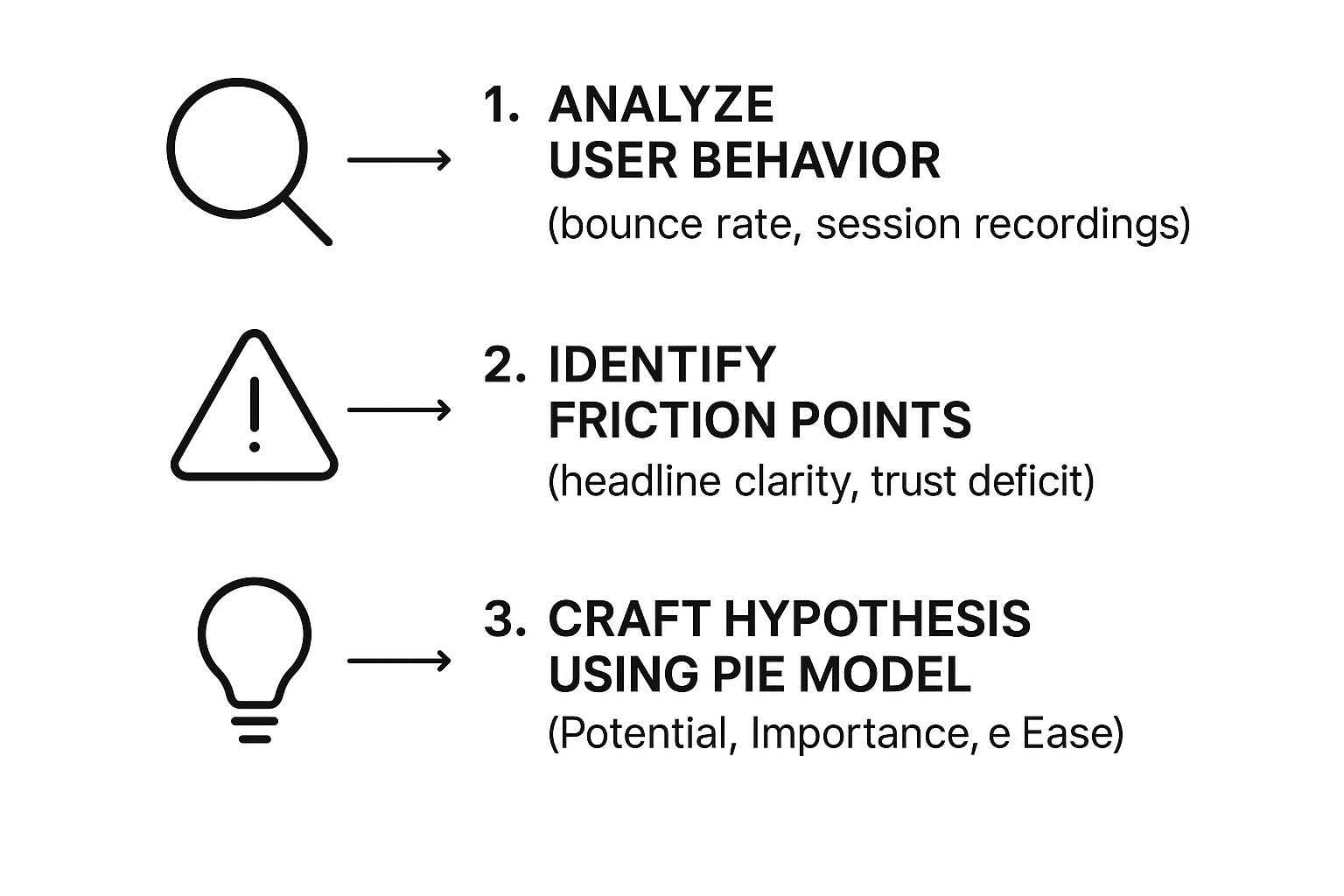

This infographic breaks down the simple, three-step process I use to move from raw data to a rock-solid hypothesis.

As you can see, a powerful hypothesis isn't pulled out of thin air. It’s the direct result of pinpointing friction through careful analysis of user behavior.

Crafting a Powerful Hypothesis

Once the clues are on the table, we can build a hypothesis that actually has a fighting chance. This isn't just a guess; it's an educated statement that defines the change, the expected result, and the reasoning behind it. I use a simplified PIE model (Potential, Importance, Ease) to prioritize which tests will deliver the biggest impact first.
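To make the prioritization step concrete, here is a minimal sketch of PIE scoring. It assumes each idea is rated 1 to 10 on Potential, Importance, and Ease and that the three ratings are simply averaged, which is a common convention rather than a fixed rule. The ideas and ratings below are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """A candidate test with hypothetical 1-10 ratings."""
    name: str
    potential: int   # how much improvement is realistically possible
    importance: int  # how valuable the traffic to this page is
    ease: int        # how easy the change is to build and launch

    @property
    def pie_score(self) -> float:
        # Assumption: a plain average of the three ratings.
        return (self.potential + self.importance + self.ease) / 3


ideas = [
    Idea("Rewrite headline to match ad copy", 9, 8, 7),
    Idea("Change CTA button color", 2, 8, 10),
    Idea("Cut form from 8 fields to 4", 7, 8, 6),
]

# Highest score first: this is the order the tests would be run in.
ranked = sorted(ideas, key=lambda idea: idea.pie_score, reverse=True)

for idea in ranked:
    print(f"{idea.pie_score:.1f}  {idea.name}")
```

Notice that the button-color test scores well on ease but still lands last, because its potential is so low. That is the point of scoring before testing.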

Let’s look at the difference between the typical agency approach and how a specialist operates:

Weak Hypothesis (Agency): "Changing the CTA button to red will get more clicks." This is a shot in the dark, probably based on some generic blog post they read. It's missing the "why" and is completely disconnected from a real user problem.

Strong Hypothesis (Consultant): "By rewriting the headline to match our Google Ad copy and directly address the user's main pain point (cost savings), we can reduce bounce rate by 15% and increase qualified form fills, because current heatmap data shows most users aren't even scrolling past the fold."

See the difference? The second one is specific, measurable, and tied directly to a problem we observed in the data. This strategic foundation is what separates meaningful landing page A/B testing from the expensive busywork that burns your budget. It ensures every test is a chance to learn something valuable and drive real, measurable growth.

Designing and Launching Your First High-Impact Test

Alright, you’ve got a solid hypothesis. Now it's time to put it to the test. This is where we move from strategy to action, and it’s about far more than just cloning your page and changing some text.

To get clean, unambiguous insights, you have to isolate your variables correctly. This is another one of those areas where a lean, expert-led process runs circles around the slow, committee-driven approach you see at big agencies.

Big agencies often push for multivariate tests—changing the headline, image, and CTA all at once. It sounds impressive, but this approach requires an insane amount of traffic and usually spits out muddy, confusing data. Frankly, it’s often a way to justify high fees without delivering real value.

For almost everyone, a simple A/B test that isolates one big change is far more powerful. Did the new headline work? Was the shorter form better? Testing one variable at a time gives you a clear, undeniable answer that tells you exactly what to do next.

Choosing Your Landing Page Test Element

When I take on a new project, the first thing I look for are the big-swing opportunities. Agencies often get bogged down in tiny, low-impact tests that produce statistically insignificant "wins." I focus on changes that can actually move the needle.

| Test Element | Typical Agency Focus (Low Impact) | Consultant Focus (High Impact) |
|---|---|---|
| Headline | Testing a single word change or punctuation. | A complete rewrite targeting a different pain point or value prop. |
| Call-to-Action | Changing the button color from blue to green. | Testing a fundamentally different offer (e.g., "Get a Demo" vs. "Download a Guide"). |
| Form | Rearranging the order of existing form fields. | Drastically reducing the number of fields to cut friction. |
| Hero Section | Swapping one generic stock photo for another. | Replacing a static image with a customer testimonial video. |

The difference is clear: agencies often test to look busy, while a specialist tests to learn and drive growth. It's about strategic bets, not random tweaks.

The Lean Advantage: Single Variable Testing

As a consultant, my process is streamlined and agile. We aren't testing for the sake of it; we are testing to learn and build momentum. That means focusing on one high-impact change per test.

Here’s what that looks like in practice:

A completely rewritten headline and sub-headline to address a different customer pain point.

A redesigned hero section with a new video that builds more trust than the old stock photo.

A simplified lead-gen form with half the fields to reduce friction.

A different offer entirely, like swapping an ebook for a free consultation.

This focused approach gets you actionable results, fast. We don't need a massive team or weeks of meetings to get a test live. When you’re ready, knowing how to boost conversions with split testing is the key to getting it done right.

The principle is simple but powerful: change one significant thing, measure the result, and learn. This lean methodology allows us to build momentum and create compounding gains—a stark contrast to the sluggish pace of a bloated agency.

Setting Up Your Test For Success

Once you’ve built your variation, launching it correctly is non-negotiable. Tools like Unbounce or Instapage can handle the mechanics, but the strategy is what matters.

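You have to ensure traffic is split evenly and at random, 50/50, between your control and your variation, and that a returning visitor always sees the same version. You also need to double-check everything for technical glitches, such as a broken form or missing conversion tracking on the variation, that could skew your data and waste the entire effort.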
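Testing tools handle the split for you, but it helps to understand what is happening under the hood. The sketch below shows one common way to do it: hash a visitor ID so that assignment is effectively random across visitors yet stable for any single visitor. The function name, the experiment label, and the visitor ID format are all hypothetical, and this is not how any particular tool is implemented.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "headline-test") -> str:
    """Deterministically place a visitor in 'control' or 'variation'."""
    # Including the experiment name means the same visitor can land in
    # different buckets across different experiments.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    return "control" if int(digest, 16) % 2 == 0 else "variation"


# Simulate 10,000 hypothetical visitors and count the split.
counts = {"control": 0, "variation": 0}
for n in range(10_000):
    counts[assign_variant(f"visitor-{n}")] += 1

print(counts)
```

A hash-based split will come out very close to 50/50 rather than exactly even, which is fine. What matters is that neither you nor the visitor's behavior decides who sees which version.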

Don't underestimate the small stuff. One famous study found that making CTAs look like actual buttons (instead of text links) resulted in 45% more clicks. Another saw a 26% CTR increase just by adding arrow icons. To get reliable data like this, your test setup has to be perfect.

This lean, focused execution is how you build high-converting landing pages that win.

Analyzing Results Like a True CRO Professional

The test is up and running. The data starts trickling in. This is exactly where most big agencies drop the ball.

They’ll send you a PDF a few weeks later with a flashy graphic declaring a “winner,” but they almost always fail to explain the most critical piece of the puzzle: the why behind the numbers. Running the test is just the starting line; the real value is unlocked in how you interpret the story the data is telling you.

This is where the advantage of working with a dedicated expert becomes obvious. We don't just look for a winner. We dig for insights about your customers.

Beyond the Conversion Rate

Just looking at which version got more form fills is surface-level stuff. A true professional looks at the entire picture to understand the full ripple effect of the change.

Did your variation win by a landslide, or was it a statistical coin toss? A bloated agency might celebrate a 2% lift, but was that result even statistically significant?

Statistical significance is non-negotiable in landing page A/B testing. It’s the statistical check that tells you how unlikely your result would be if the change had no real effect, so you can separate a genuine lift from random noise. Declaring a winner before hitting a high confidence level (I never settle for less than 95%) is a classic rookie mistake that leads to terrible follow-up decisions.

Here's the checklist I run through for a proper analysis:

Which version won? First, identify the clear winner based on your primary KPI.

By how much? Pinpoint the exact percentage lift in conversions.

Is it significant? Crucially, confirm the result has hit statistical significance.

What about secondary metrics? Did the test move the needle on time on page, bounce rate, or scroll depth?

What did we actually learn? This is the most important question. What does this result teach us about what motivates our audience—or what scares them away?
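The "by how much" and "is it significant" steps in the checklist above can be sketched with a standard two-proportion z-test. This is one common way to check significance, not necessarily what your testing tool uses internally, and the visitor and conversion counts are invented for illustration.

```python
from math import erfc, sqrt


def ab_significance(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (relative lift, z-score, two-sided p-value) for B versus A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the normal curve
    lift = (rate_b - rate_a) / rate_a
    return lift, z, p_value


# Hypothetical results: 10,000 visitors per version.
scenarios = {
    "big swing (400 vs 470 conversions)": (400, 10_000, 470, 10_000),
    "tiny tweak (400 vs 408 conversions)": (400, 10_000, 408, 10_000),
}

for label, data in scenarios.items():
    lift, z, p = ab_significance(*data)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: lift {lift:+.1%}, p = {p:.3f} -> {verdict} at 95%")
```

With the same traffic, the larger lift clears the 95% bar while the 2% lift does not, which is why a small "win" on a report deserves a second look.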

The Power of a Failed Test

That last point is everything. I’ve found that sometimes, the most powerful insights come from tests that, on paper, "fail."

I once ran a test for a client where the variation—a page with much more aggressive, benefit-heavy language—actually performed slightly worse than the control.

An agency would have called it a dud, filed their report, and moved on. But I needed to know why.

Digging in, we realized the "losing" variation, while not converting as well, was keeping users on the page 30% longer. They were reading the new copy, and reading it intently. This told us we were on the right track with the core message, but we had probably introduced some new friction or left a key question unanswered.

The "failed" test handed us a powerful insight into a key customer objection we hadn't even realized we needed to address. We used that learning to pivot the messaging on the very next test, which went on to double the page’s conversion rate. That’s the kind of deep learning that fuels real growth—something you'll never get from a surface-level agency report.

Of course, accurate data interpretation starts with flawless data collection. If your backend setup is a mess, your test results are completely useless. For more on this, you can learn how to fix your Google Ads conversion tracking to make sure your analysis is always built on a solid foundation.

From a Single Win to a Flywheel of Growth

Here's the single biggest mistake I see in conversion optimization: treating A/B testing like a one-and-done project. A test finishes, a winner gets picked, and then… nothing. It’s the classic agency model. They ship a report, close the ticket, and move on, leaving so much potential revenue on the table.

Real growth isn't about that one win. It’s about building a continuous cycle of testing, learning, and iterating. This is where a dedicated consultant earns their keep. My goal isn't just to find you a better headline; it’s to build a long-term testing roadmap that generates compounding returns over time.

Because when one test ends, the real work has just begun. The result is just the first clue for what we need to test next.

Systematically Building on Your Wins

So, your new headline won. Great. But what does that mean? It means we've just learned which core message truly connects with your audience. The next step isn't to pop the champagne and stop—it's to ask, "How can we double down on this winning message?"

This is how we turn a single result into a strategic roadmap:

Headline Win: If the winning headline hammered home a specific benefit, our next test is to align the body copy, CTA, and even the imagery with that same powerful idea.

Form Field Win: A shorter form boosted leads? Awesome. The next experiment could be a two-step form to see if we can pre-qualify those leads and improve quality without sacrificing volume.

Image Win: An image of a person beat a simple product shot. Our next hypothesis should be that adding a video testimonial will build even more trust and drive conversions higher.

See how each test informs the next? We’re creating a strategic sequence that turns your static landing pages into finely-tuned conversion machines. It’s a methodical process, not just a bunch of random projects.

A common agency pitfall is "test-and-forget." A dedicated consultant, however, uses each test result as a building block for the next, creating a flywheel of continuous improvement that drives sustained growth.

Unlocking Deeper Insights Through Segmentation

Ready for the next level? It’s all about segmenting your results. A "win" is almost never universal. Did the new version kill it for mobile users but fall flat on desktop? Did it convert traffic from Google Ads but do nothing for your social media visitors?

This is where the magic happens. For example, if we find that mobile users from paid search convert best with a super-concise value prop, we can build a dedicated variant just for them. The data on this is clear—personalization works. In fact, studies show that personalized calls-to-action convert 202% better than generic ones.

More than that, businesses with 31 to 40 landing pages generate seven times more leads than companies with just a handful. This suggests that both the quality and quantity of tailored experiences matter. You can dig into more stats about how personalization drives results on KlientBoost.

This granular analysis allows for powerful personalization, the kind of deep work that bloated agencies rarely have the time or incentive to do. It’s how you get beyond basic landing page A/B testing and start building a truly intelligent, data-driven conversion strategy.
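As a rough illustration of segmenting results, the sketch below breaks one test down by device and computes the lift for each segment. The segment names and every number in it are hypothetical; in practice the rows would come from your analytics export.

```python
from collections import defaultdict

# Hypothetical export: (segment, variant, visitors, conversions)
results = [
    ("mobile", "control", 3000, 90),
    ("mobile", "variation", 3000, 132),
    ("desktop", "control", 2000, 100),
    ("desktop", "variation", 2000, 98),
]


def lift_by_segment(rows):
    """Return the relative lift of variation over control for each segment."""
    rates = defaultdict(dict)
    for segment, variant, visitors, conversions in rows:
        rates[segment][variant] = conversions / visitors
    return {
        segment: (r["variation"] - r["control"]) / r["control"]
        for segment, r in rates.items()
    }


for segment, lift in lift_by_segment(results).items():
    print(f"{segment}: {lift:+.1%}")
```

In this made-up data the variation is a clear improvement on mobile and roughly flat on desktop. Each segment has less traffic than the full test, so it needs its own significance check before you act on it.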

Common Questions About Landing Page A/B Testing

Even when you have a solid plan, the same questions always come up right before you hit "go" on a landing page A/B testing project. Honestly, getting these wrong can tank your results and burn through your budget.

This is where I’ve seen big, process-driven agencies drop the ball. They follow a script, but they miss the specialist's perspective. Let's dig into a few of those common hurdles.

How Long Should I Run an A/B Test?

There's no magic number here. The right answer has everything to do with your traffic volume and hitting statistical significance—not a random two-week guess.

A huge mistake I see all the time is stopping a test early just because one variation pulls ahead after a few days. You have to let it run for at least one, preferably two, full business cycles to account for weird daily or weekly swings in user behavior.

But the real gatekeeper is the data. You absolutely must reach the sample size the test needs before you can confidently call a winner. Any good consultant will use a sample size calculator to figure this out beforehand, based on your current conversion rate and the smallest lift worth detecting, making sure the decision is based on cold, hard data, not a gut feeling. That’s the kind of discipline that often gets lost when an agency is juggling dozens of clients at once.
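For a sense of what such a calculator does, here is a minimal sketch using the standard sample size formula for comparing two conversion rates. The defaults (95% confidence, 80% power) are common choices and are my assumption, as are the example baseline rate, target lift, and daily traffic. The duration helper rounds up to whole weeks to respect the full-business-cycle rule.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH version to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def duration_days(n_per_variant: int, daily_visitors: int,
                  cycle_days: int = 7) -> int:
    """Days to run the test, rounded up to whole business cycles."""
    raw_days = ceil(2 * n_per_variant / daily_visitors)
    return max(cycle_days, ceil(raw_days / cycle_days) * cycle_days)


# Hypothetical page: 4% baseline conversion rate, 1,000 visitors a day,
# and we want to detect a 20% relative lift.
needed = visitors_per_variant(baseline_rate=0.04, relative_lift=0.20)
print(f"Visitors needed per version: {needed}")
print(f"Planned duration: {duration_days(needed, daily_visitors=1000)} days")
```

The smaller the lift you want to detect, the more traffic you need, which is another reason big-swing tests tend to finish faster than tiny tweaks.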

Will A/B Testing Hurt My SEO?

Nope, not if you know what you're doing. In fact, Google actually encourages testing to improve user experience. The catch is that you have to set it up correctly on the technical side, a detail generalist agency teams sometimes gloss over.

The proper way to handle this is by using a rel="canonical" tag on your variation page that points back to the original URL. This little piece of code tells Google, "Hey, this is just a test version of the original, not duplicate content." It’s a simple step that prevents you from getting penalized.

It's also crucial to avoid "cloaking," which is showing Google's bots one version of your page while users see something different. As a consultant whose job is to look at your whole business, I make sure every single test is SEO-safe from day one.

An agency might get hyper-focused on the conversion rate of the test itself, but a true partner makes sure your testing doesn't sabotage your long-term organic traffic. It’s a small technical detail with massive consequences.

How Many Tests Can I Run at Once?

For almost every business I’ve worked with, the answer is simple: run one test on a single page at a time. It's so tempting to test a new headline and a new CTA in separate tests on the same page, but you'll completely contaminate your data. You'll never know for sure which change was responsible for the lift (or the drop).

Sure, massive companies like Amazon use complex multivariate testing, but for most of us, a focused, one-at-a-time approach gives you clean, actionable data. The goal isn't to run fancy, complicated experiments; it’s to get clear answers you can build on. I always prioritize getting reliable learnings over muddy data from tests that look impressive but teach you nothing.

If you really want to dive deep into the nuts and bolts, this comprehensive guide to Divi A/B testing breaks down the process in great detail.

Ready to stop wasting your ad spend on landing pages that don't convert? At Come Together Media LLC, I provide the one-on-one, specialized Google Ads expertise that oversized agencies simply can't match. Schedule a free consultation today and let’s build a testing strategy that delivers real, measurable growth.
